It’s been a while since I last wrote here, and a lot has happened in the meantime. On a global scale, we’re going through a pandemic; on a personal level, I’ve left academia to start a quantum computing company.

A while ago I heard Carl Caves say something like “You should work on what you consider to be the most important problem in physics”. “Most important” is, of course, subjective. To me, it has always been about understanding and pushing the limits of quantum mechanics while staying within the realm of what we can actually test in experiment. Large quantum computers would open a new realm of science, pushing quantum theory far beyond anything that nature can demonstrate. And so, building these machines and scaling them up is certainly the most exciting science we can do today.

OK, but we already know that…

I have to be realistic: building a quantum computer is really hard, and many good people are already working on it, so where can I contribute? This was the question my co-founders and I were asking ourselves about a year ago. Before giving my answer, I want to define the goal: building a large quantum computer.

But what is large?

Going back to the original goal, I would like to see a machine that could potentially demonstrate radically new physics. But that’s difficult to define. Perhaps we would know it when we see it, but is there a more concrete target to aim for?

Since we are talking about a computer, what we want are machines that perform computations that are essentially impossible on a classical computer (a.k.a. quantum supremacy). That is a good first step, but it’s not good enough. There are many things in nature that cannot be simulated on classical computers. One can argue that quantum supremacy offers nothing more interesting than a chemical reaction in terms of its ability to demonstrate radically new physics. What we need is an additional criterion, one that is difficult to define precisely but would ultimately mean one of two things:

- Using quantum computers to answer questions that cannot be answered in a lab.

- Using quantum computers to search for violations of known physics.

We are still (in my opinion) many years away from taking either of these seriously; however, we are nearing the stage where quantum computers can become useful scientific tools that are, at the very least, more efficient than some experiments in terms of manpower, money, and time. To me, that is the real first step in building machines that would eventually open a new realm of science and technology. I am optimistic that this first step will also open the door to other use cases, and I believe we are close.

Building quantum computers

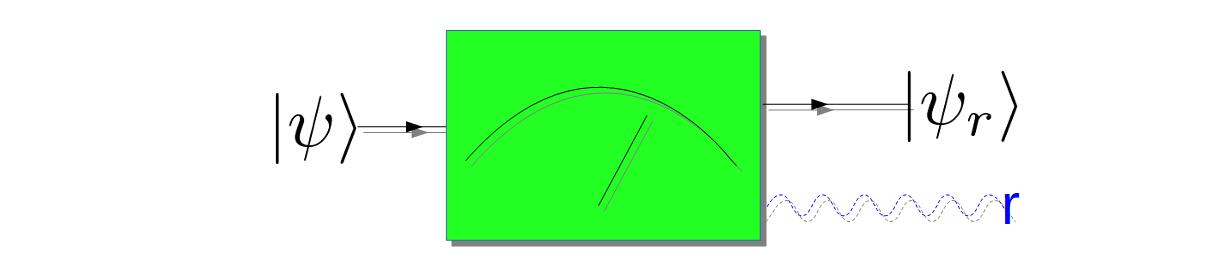

There are generally two main ways to improve the performance of a quantum computer: increase the number of qubits, and improve the control over those qubits. The problem is that it’s difficult to do both. In fact, more qubits mean more control issues, and it’s difficult to decide which matters more. The obvious solution is to focus on small, high-quality devices and then interconnect them. This, of course, comes with two difficulties: building the interconnects and dealing with connectivity issues. That’s where Entangled Networks comes in.

We decided that since the most important problem is scaling quantum computers, we should focus on the best solution: interconnecting small, high-quality processors. It’s an exciting problem, and one that needs to be solved as soon as possible in order to build quantum computers that would interest the general community. It is also a problem that can be solved within a reasonable timeframe. I am happy to report that we are making good progress. On a personal level, it’s been a great ride with many ups and downs (as expected), new challenges, and lots of fun.